A Day in the Life of a Data Annotator: Behind Computer Vision

Duy Pham | June 4th, 2025

Matroid builds no-code computer-vision detectors that can spot everything from microscopic material defects to real-time safety hazards on a factory floor. But “no-code” doesn’t mean “no humans.” Every model we deploy is the result of seamless teamwork between our engineers—who design the algorithms—and our data annotation specialists—who teach those algorithms what the world actually looks like. This series, The Visionary Mind, pulls back the curtain on that partnership so readers can see how human insight and machine precision combine to push computer vision forward.

When people think about AI that can “see,” whether it is a self-driving car, a medical imaging system, or intelligent surveillance, they usually picture the end product. What they do not see is the massive amount of human effort behind the scenes. Data annotators are the ones who carefully label images, videos, and everything in between so that machines can actually learn.

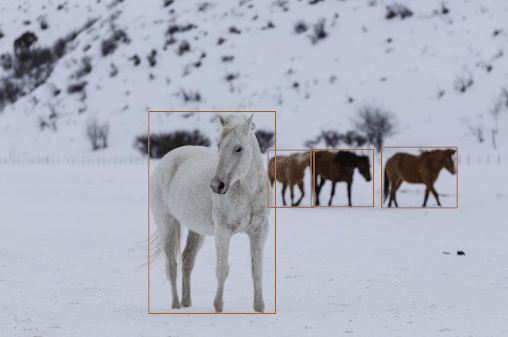

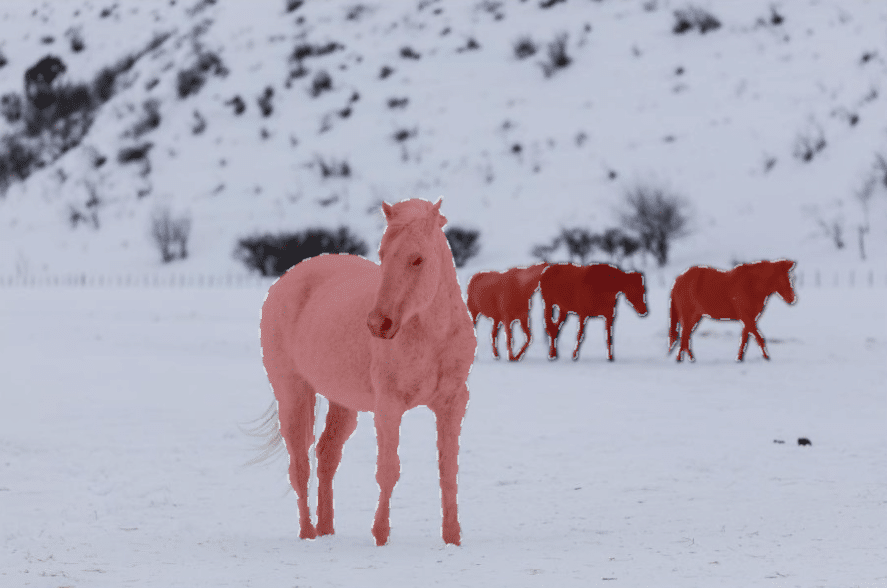

Think of annotation as digital apprenticeship: every bounding box, polygon, or class label is a tiny lesson we hand to the algorithm, saying, “This is what matters—and here’s why. Data annotation is not only about drawing boxes. Some days, it means painstakingly outlining tiny details pixel by pixel. Other days, it means making judgment calls in messy real-world scenarios.

Those judgment calls are where the human element shines. A streetlight obscured by fog, a surgical implant partially hidden by glare—these are puzzles that require intuition, context, and sometimes heated team debates. We’re not just labeling pixels; we’re encoding collective experience so future detectors can make life-or-death distinctions in milliseconds.

One of the best parts of this work is seeing its real-world impact. Our team has contributed to projects across various industries, including aerospace, healthcare, and security. While we can’t publicly discuss the specifics, one project that stands out involved a client in the security sector. We labeled hundreds of thousands of images, including rare and challenging scenarios, training the AI not only to detect objects but also to understand context, such as identifying early signs of potentially dangerous situations. Knowing that our work could help prevent real-world incidents is what makes it worth it. For instance, picture a crowded transit hub at rush hour: a single unattended backpack appears beneath a stairwell, partially hidden by shadows and foot traffic. A typical camera feed would record the scene passively, but our trained detector flags the bag within seconds—distinguishing it from harmless luggage by cross-referencing behavior patterns (no accompanying person, stationary for too long) and environmental cues (proximity to restricted zones). Security personnel receive an alert before most commuters have even noticed the risk, giving them precious minutes to investigate and, if necessary, evacuate. Scenarios like this—entirely hypothetical yet technically feasible—show how context-aware annotation turns raw pixels into early warnings that can save lives.

Moments like these remind us that annotation isn’t busywork—it’s an act of stewardship. Every false negative we eliminate could be a prevented accident; every edge case we anticipate could keep critical environments safer or a patient healthier.

Of course, there are challenges. Visual data in the real world is messy. Objects are not always apparent. Lighting can be terrible. Sometimes, you are making split-second decisions about whether that blurry figure is a person, a signpost, or just a strange shadow. It not only requires precision but also demands a lot of critical thinking and situational awareness.

Consistency is another non-negotiable part of the job. Small mistakes might not seem like a big deal at first, but even tiny inconsistencies can seriously confuse machine learning models. It is a role where attention to detail is not only appreciated but also essential, especially when working with datasets consisting of hundreds of thousands of images.

The field is also evolving quickly. New tools are constantly emerging, including semi-automated platforms that suggest annotations or pre-label parts of images. They make the work faster, but they also add a new layer of responsibility. We are not just labeling anymore. We are quality control for the machines, making sure their guesses are accurate and grounded in reality.

Despite the challenges, it is an exciting place to be. Computer vision is expanding rapidly, and the applications are becoming more ambitious, from diagnosing diseases earlier to creating more innovative augmented reality experiences. While AI tends to get the spotlight, it is the careful, often invisible groundwork —the thousands of labeled frames —that makes it all possible. In other words, if AI is the orchestra, annotation is the sheet music. Remove it, and all you have left is noise.

Looking ahead, I’m excited for what’s next. As the field continues to grow, so does the importance of the work behind it. No matter how advanced AI becomes, it still needs people to guide it, correct it, and teach it how to make sense of the world. Being part of that foundation is not just rewarding; it is a reminder that real progress always starts with the details.

About the Author

Duy is a Data Annotation Specialist at Matroid. He first became interested in CV because of his photography hobby.

Building Custom Computer Vision Models with Matroid

Dive into the world of personalized computer vision models with Matroid's comprehensive guide – click to download today