How xDS Makes Replicated Inference Possible and Reduces Downtime in Stream Monitoring Systems

Philip Wu | May 1st, 2025

Stream monitoring systems are expected to operate around the clock, detecting anomalies, tracking movement, and maintaining continuous visibility across environments like airports and manufacturing floors. In these high-stakes applications, even short interruptions can lead to missed detections, operational slowdowns, or security risks.

Yet, despite having powerful deep learning models and scalable infrastructure, reliability often remains elusive. Systems falter not because of flaws in the models, but due to brittle deployment architectures—single points of failure, fixed IP targeting, or delayed response to service interruptions.

We encountered this firsthand while deploying real-time inference services in a Kubernetes environment. Our workloads process multiple frames per second, 24 hours a day. The underlying models were fast and accurate enough to keep up, but that wasn’t sufficient: the serving infrastructure also needed to be highly available.

The challenge wasn’t about scaling inference but about making it resilient.

The Naive Setup: Static Assignments and Single Points of Failure

In our Kubernetes deployments, each GPU-powered server hosts a single Triton inference pod, and each pod serves multiple models via gRPC. The simplest approach has each stream monitoring task send inference requests directly to a fixed IP: the address of the one pod hosting its model.

This setup works well, as long as your servers can run forever without crashing, restarting, or needing updates. Of course, that isn’t realistic. Even a routine update interrupts service: restarting a pod to roll out a new image pauses inference for every task pointed at it. With a naive system like this, customers could lose detections for minutes at a time.

It was clear that this wouldn’t be sufficient: we needed redundancy. But you don’t get redundancy just by running two copies of your models. Redundancy means your system knows which pods are alive and which models each one serves, which GPUs can take traffic, and how best to distribute requests among them in real time.

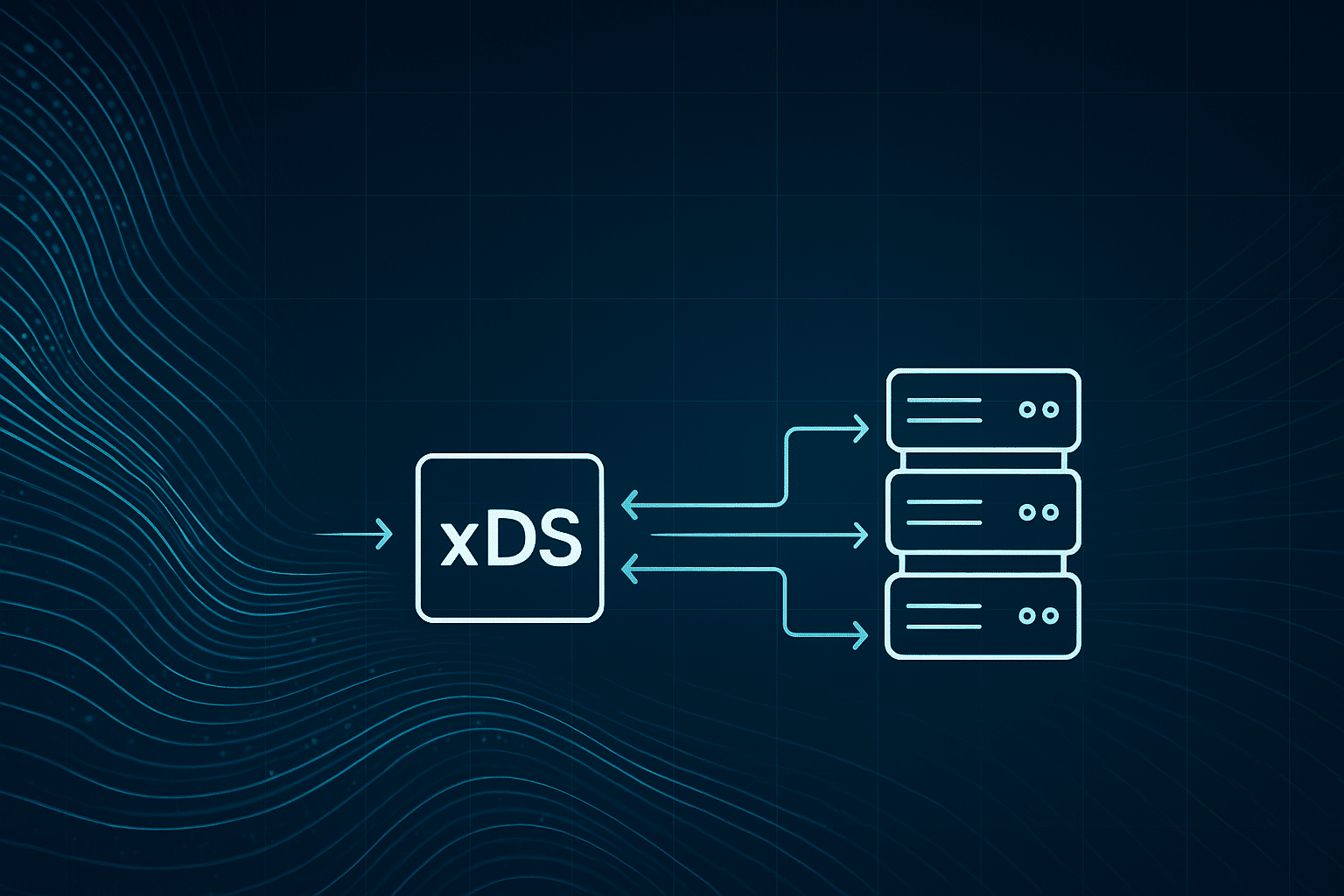

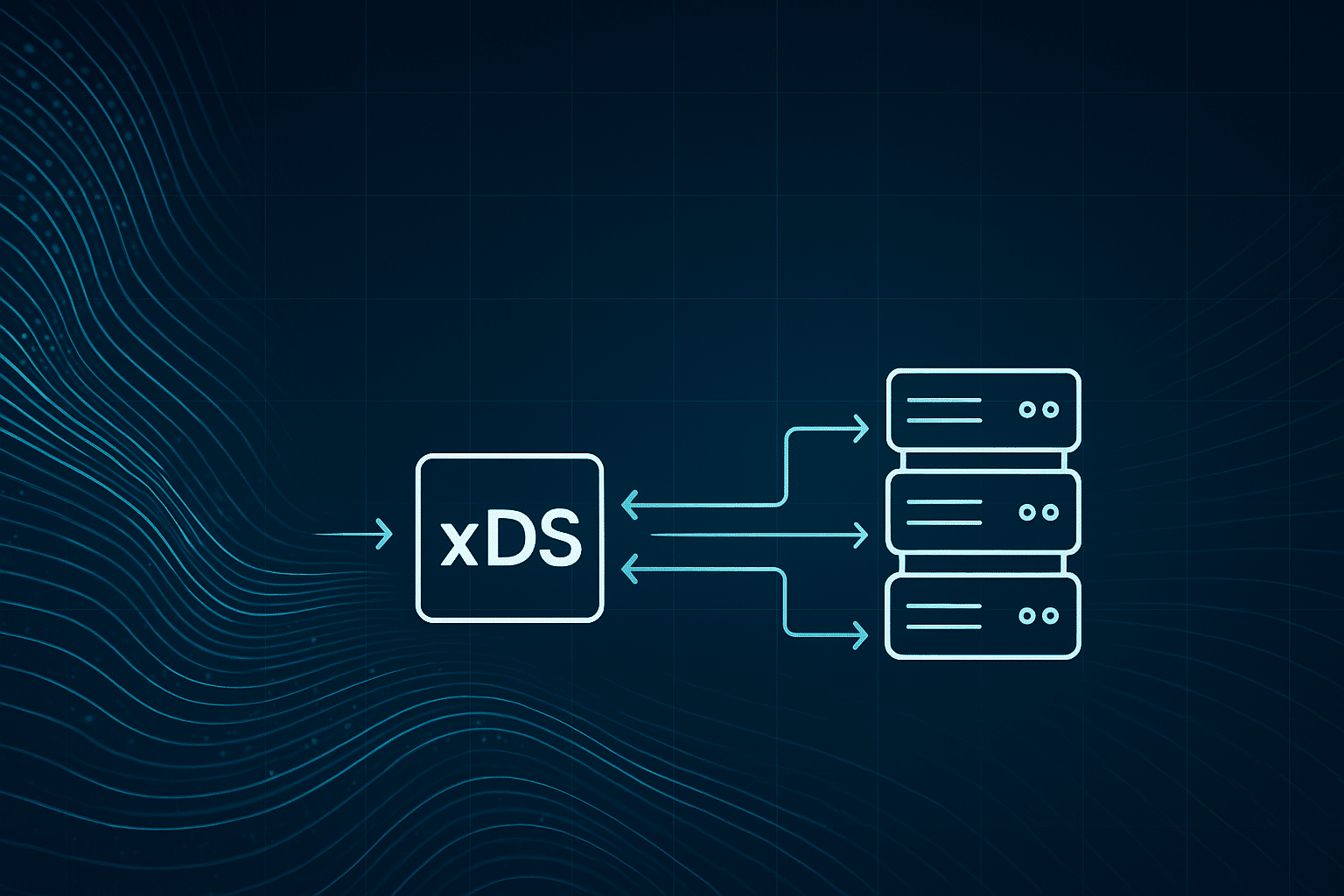

The Resilient Setup with xDS: Dynamic Discovery for Dynamic Systems

The solution came from a powerful tool: xDS. Originally built for Envoy, xDS is a family of dynamic configuration APIs (the “x” is a placeholder for the individual services), including the Cluster Discovery Service (CDS), Endpoint Discovery Service (EDS), Listener Discovery Service (LDS), and Route Discovery Service (RDS).

These APIs allow a control plane to configure the data plane in real time. That means your system can discover which services are running, where they live, and how to route traffic between them—all without restarting or hardcoding anything.

In our architecture, we built an xDS control plane that manages a live mapping of:

{ detector_id: [serving machine IPs] }
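
To make the mapping concrete, here is a minimal sketch of how a control plane might translate it into endpoint resources. It assumes one xDS cluster per detector, named with a hypothetical `detector-<id>` scheme, and Triton’s default gRPC port 8001. The dicts mirror the field names of Envoy’s v3 `ClusterLoadAssignment` (the EDS resource), but they are plain Python data, not a real xDS server.

```python
from typing import Dict, List

# Assumption: Triton's default gRPC port; adjust to match your deployment.
TRITON_GRPC_PORT = 8001


def to_load_assignments(mapping: Dict[str, List[str]]) -> List[dict]:
    """Build one EDS-style ClusterLoadAssignment dict per detector.

    `mapping` is the control plane's live view, {detector_id: [serving machine IPs]}.
    Field names follow Envoy's v3 ClusterLoadAssignment; the "detector-<id>"
    cluster naming scheme is hypothetical.
    """
    assignments = []
    for detector_id, ips in sorted(mapping.items()):
        lb_endpoints = [
            {
                "endpoint": {
                    "address": {
                        "socket_address": {"address": ip, "port_value": TRITON_GRPC_PORT}
                    }
                },
                # Assuming the mapping only holds live replicas, mark them HEALTHY.
                "health_status": "HEALTHY",
            }
            for ip in sorted(ips)
        ]
        assignments.append({
            "cluster_name": f"detector-{detector_id}",
            "endpoints": [{"lb_endpoints": lb_endpoints}],
        })
    return assignments


if __name__ == "__main__":
    live = {"ppe": ["10.0.2.7", "10.0.1.12"], "forklift": ["10.0.2.7"]}
    for cla in to_load_assignments(live):
        ips = [e["endpoint"]["address"]["socket_address"]["address"]
               for e in cla["endpoints"][0]["lb_endpoints"]]
        print(cla["cluster_name"], ips)
```

Running it prints `detector-forklift ['10.0.2.7']` followed by `detector-ppe ['10.0.1.12', '10.0.2.7']`. A production control plane would send these as protobuf messages over a gRPC stream rather than as dicts.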

Each stream monitoring task connects to this control plane. When it needs to perform inference, it doesn’t call into a hardcoded pod—it relies on xDS to know which replicas are currently alive and ready. Requests are automatically balanced between multiple replicas per detector model.
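
On the client side, this behavior amounts to keeping a per-detector replica list that the control plane can replace at any time, and spreading requests across it. In practice, a gRPC client with xDS support applies whatever load-balancing policy the control plane configures. The `ReplicaPicker` class below is a hypothetical stand-in that shows plain round robin over the current list.

```python
import itertools
import threading
from typing import Dict, Iterator, List


class ReplicaPicker:
    """Round-robin picker over the live replica list for each detector.

    Hypothetical sketch: `update` is called whenever the control plane pushes a
    new endpoint list, and `pick` is called once per inference request.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._cycles: Dict[str, Iterator[str]] = {}

    def update(self, detector_id: str, ips: List[str]) -> None:
        # Swap the rotation under the lock so a concurrent pick sees either the
        # old list or the new one, never a half-updated state.
        with self._lock:
            if ips:
                self._cycles[detector_id] = itertools.cycle(list(ips))
            else:
                self._cycles.pop(detector_id, None)

    def pick(self, detector_id: str) -> str:
        with self._lock:
            cycle = self._cycles.get(detector_id)
            if cycle is None:
                raise LookupError(f"no live replicas for detector {detector_id!r}")
            return next(cycle)


if __name__ == "__main__":
    picker = ReplicaPicker()
    picker.update("ppe", ["10.0.1.12", "10.0.2.7"])
    print([picker.pick("ppe") for _ in range(4)])
    # ['10.0.1.12', '10.0.2.7', '10.0.1.12', '10.0.2.7']
```

Calling `update` with a shorter list is all it takes for traffic to stop reaching a removed replica; the task never restarts or reconfigures a hardcoded address.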

If one pod goes down, the control plane removes it from the endpoint list and clients stop routing traffic to it, while the other pod hosting the model keeps handling requests. The customer never notices a blip.
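
The failover step can be sketched as a pure function over the same `{detector_id: [serving machine IPs]}` mapping: when a pod disappears, the control plane drops its IP from every detector it served and pushes the result. The sketch below uses a hypothetical `remove_pod` helper that also reports detectors left with no replica, since those are exactly the cases where a missing second copy would become visible to the customer.

```python
from typing import Dict, List, Tuple


def remove_pod(
    mapping: Dict[str, List[str]], pod_ip: str
) -> Tuple[Dict[str, List[str]], List[str]]:
    """Drop `pod_ip` from every detector's replica list.

    Returns the new mapping, omitting detectors with no replicas left, and the
    sorted IDs of detectors that just lost their last replica. The input is not
    modified, so the previous snapshot stays valid until the new one is pushed.
    """
    updated: Dict[str, List[str]] = {}
    orphaned: List[str] = []
    for detector_id, ips in mapping.items():
        remaining = [ip for ip in ips if ip != pod_ip]
        if remaining:
            updated[detector_id] = remaining
        elif pod_ip in ips:
            # The failed pod was this detector's only replica: worth alerting on.
            orphaned.append(detector_id)
    return updated, sorted(orphaned)


if __name__ == "__main__":
    live = {"ppe": ["10.0.1.12", "10.0.2.7"], "forklift": ["10.0.2.7"]}
    print(remove_pod(live, "10.0.2.7"))
    # ({'ppe': ['10.0.1.12']}, ['forklift'])
```

In this example the `ppe` detector keeps serving from its second replica, while `forklift` shows up as under-replicated.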

Why xDS Enables True Replication

xDS solves three key problems that static systems struggle with:

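1. Dynamic Discovery: When a pod starts or stops, the control plane pushes an updated endpoint list to every subscribed client as soon as it observes the change, with no restarts or config edits.

2. Load Balancing: Requests are spread across healthy replicas according to the configured load-balancing policy, reducing the risk of overloading any single pod.

3. Health Awareness: As soon as a pod is marked unhealthy, it’s removed from the serving pool.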
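
Discovery and health awareness both rely on the control plane pushing changes rather than clients polling. xDS responses carry a `version_info` string so clients can tell one configuration snapshot from the next. The sketch below imitates that idea in-process with a hypothetical `EndpointPublisher` that notifies subscribers only when the endpoint set actually changes. It is not a gRPC server, and it leaves out the ACK/NACK handshake a real xDS stream uses.

```python
from typing import Callable, Dict, List

Snapshot = Dict[str, List[str]]
Subscriber = Callable[[str, Snapshot], None]


class EndpointPublisher:
    """Holds the current {detector_id: [IPs]} snapshot and pushes changes.

    Hypothetical sketch: an integer counter stands in for xDS `version_info`.
    """

    def __init__(self) -> None:
        self._version = 0
        self._snapshot: Snapshot = {}
        self._subscribers: List[Subscriber] = []

    def _send(self, callback: Subscriber) -> None:
        # Hand each subscriber its own copy so it cannot mutate shared state.
        callback(str(self._version), {k: list(v) for k, v in self._snapshot.items()})

    def subscribe(self, callback: Subscriber) -> None:
        self._subscribers.append(callback)
        # A new subscriber receives the current state right away.
        self._send(callback)

    def set_snapshot(self, snapshot: Snapshot) -> bool:
        """Publish a new snapshot; returns False if nothing actually changed."""
        # Sort IPs and drop empty entries so reordered but identical input is a no-op.
        normalized = {k: sorted(v) for k, v in snapshot.items() if v}
        if normalized == self._snapshot:
            return False
        self._version += 1
        self._snapshot = normalized
        for callback in self._subscribers:
            self._send(callback)
        return True


if __name__ == "__main__":
    pub = EndpointPublisher()
    pub.subscribe(lambda version, snap: print(version, snap))
    pub.set_snapshot({"ppe": ["10.0.2.7", "10.0.1.12"]})
    pub.set_snapshot({"ppe": ["10.0.1.12", "10.0.2.7"]})  # same set: no push
    pub.set_snapshot({"ppe": ["10.0.1.12"]})              # a pod went away
```

The example prints three updates (versions 0, 1, and 2). The second call is suppressed because only the order of the IPs changed.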

With this setup, we achieved:

- Zero-downtime deployments

- Resilience against instance and GPU failures

- Simplified routing logic for monitoring tasks

All inference pods implement standard Kubernetes health checks, and our xDS control plane watches those signals to decide what’s live and what’s not. If a pod is being updated or crashes, the control plane reroutes traffic before a single frame is lost.

From Fragile to Fault-Tolerant

Incorporating xDS let us stop treating inference as a static service and start treating it as a dynamic, distributed system. It gave us the language, tools, and flexibility to replicate every part of it.

Now, when we get an alert about Stream 42, it’s never because the infrastructure went down; it’s because something actually happened on that stream. And with less time spent firefighting, we finally have the bandwidth to monitor something new.

About the Author

Philip Wu is a Software Engineer at Matroid. When he’s free, you’ll probably find him watching or playing sports.
