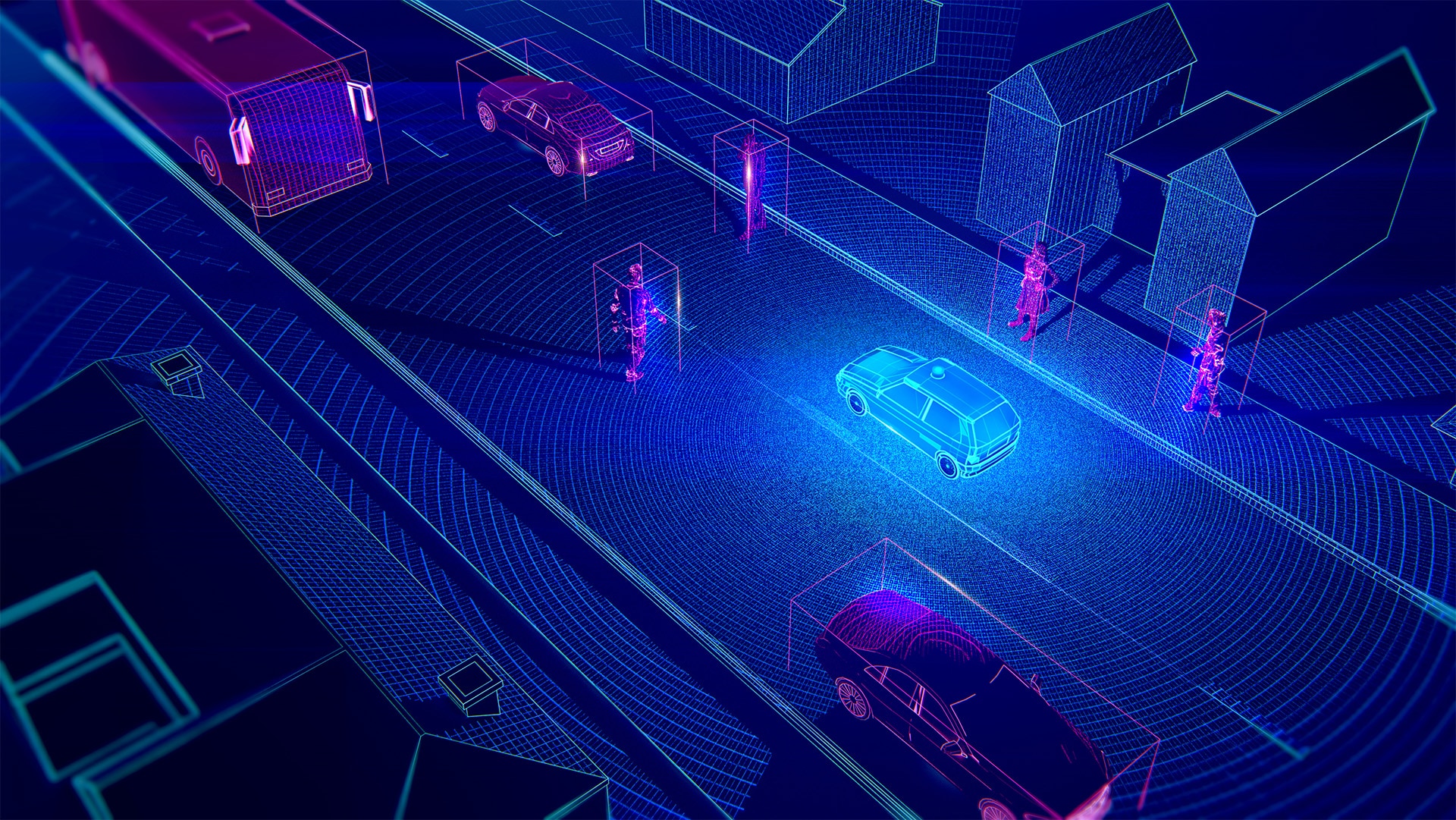

Managing User-Facing Complexity in Computer Vision Applications

John Goddard | May 6th, 2025

Designing user experiences (UX) for no-code platforms has always been about making complex tasks accessible. However, when it comes to computer vision (CV), the challenges multiply. Unlike traditional software applications, where user actions have immediate and predictable results, CV workflows introduce inherent conceptual and interaction complexities that require a different UX approach.

The Complexity of CV UX

No-code computer vision platforms aim to let users create powerful models without deep technical knowledge. However, building those models involves several sources of complexity:

1. Selecting an Annotation Strategy

Annotating data for computer vision datasets is a nuanced process—both intuitive and counterintuitive. While users generally understand the objects or defects they are looking for, translating that intuition into a structured annotation strategy that yields high-quality model performance is not always straightforward.

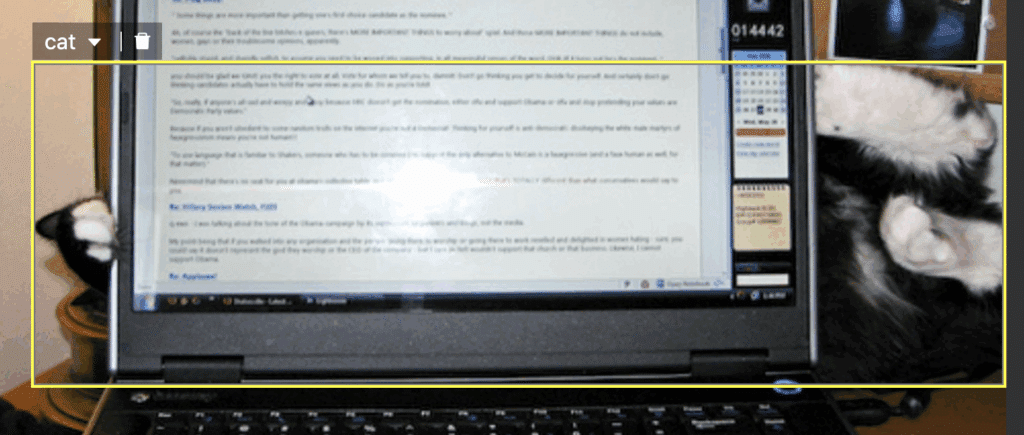

Unlike traditional UI interactions, computer vision annotation requires users to make critical decisions: What defines an object? Should it be annotated with a bounding box, segmentation mask, or classification tag? How can consistency be maintained across a dataset? These choices, often subjective, can significantly impact model performance.

For instance, when identifying scratches on a part, one user might annotate only the scratch itself, while another might label the entire part as ‘defective.’ These differing approaches can lead to vastly different model behaviors, highlighting the importance of a well-defined and standardized annotation strategy.

Figure: Different annotation styles for the same image of a partially obscured cat: (top) bounding box across the occlusion, (middle) splitting up the bounding boxes, (bottom) segmentation masks.
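One way to catch the scratch-versus-whole-part inconsistency described above is to check that each label uses a single annotation style across the dataset. The following is a minimal sketch under stated assumptions, not Matroid's implementation: the `Annotation` record, its field names, the `kind` values, and the file names are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    """Hypothetical annotation record; field names are assumptions."""
    image_id: str
    label: str
    kind: str  # "box", "mask", or "tag"


def mixed_style_labels(annotations):
    """Return each label that was annotated with more than one kind."""
    kinds_by_label = defaultdict(set)
    for ann in annotations:
        kinds_by_label[ann.label].add(ann.kind)
    return {
        label: sorted(kinds)
        for label, kinds in kinds_by_label.items()
        if len(kinds) > 1
    }


annotations = [
    Annotation("part_001.png", "scratch", "box"),  # scratch itself boxed
    Annotation("part_002.png", "scratch", "tag"),  # whole part tagged instead
    Annotation("part_003.png", "dent", "box"),
]

print(mixed_style_labels(annotations))  # {'scratch': ['box', 'tag']}
```

A check like this only detects mixed annotation types. It cannot tell whether two annotators drew their boxes around the same thing, which still requires review against a written annotation guideline.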

2. Time-Consuming Interactions and Edge Cases

Annotation can involve time-consuming user interactions such as polygon or freeform boundary drawing. While these interactions are not uncommon, they can be tricky for users to complete quickly and consistently. Additionally, even with a pre-defined annotation strategy, edge cases may pop up during the annotation process, such as how to handle partially obscured or grouped objects.

Figure: Different annotation interactions: (top) Click and drag bounding box, (middle) drawing segmentation masks, (bottom) prompting a vision foundation model.

3. Managing Large-Scale Tasks

Depending on the level of model performance required, datasets may contain tens or hundreds of thousands of images (in some cases, many more). Even with simple bounding box annotations, these annotation tasks often require multiple users and countless hours of work.

For many users looking to leverage CV, this requirement can be prohibitive without assisted workflows.

4. Delayed Feedback and Uncertain Outcomes

Unlike traditional interfaces, where users immediately see the effect of their actions, CV workflows may not provide instant feedback. For example, annotating thousands of images before training a model means users won’t see results until much later.

This deferred feedback makes it difficult to pinpoint where things may have gone wrong in the process due to the multitude of steps between dataset creation, annotation, and training.

5. Complex Evaluation

Evaluation approaches for computer vision models often come down to a choice between jargon-heavy, imprecise, or time-consuming methods. For technical users, traditional model metrics such as mean average precision (mAP) give a quick snapshot of how a model is performing and whether it is improving over time. For non-technical users, however, a specific mAP score means little, and it can easily be misread as a percentage grade. Read that way, state-of-the-art object detection models at the time of writing would be earning only a solid D.

Alternatively, simply running the trained model can take a long time to validate for rare events, and it gives an incomplete picture: objects the model misses produce no output, so those misses go unseen.
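To see why an mAP score is not a percentage grade, it helps to look at how average precision (AP) is computed for a single class. The sketch below is a simplified illustration, assuming detections have already been sorted by confidence and marked as correct or incorrect against ground truth. Real benchmarks additionally average over classes, and often over several overlap thresholds, to arrive at mAP. The function name and the sample data are hypothetical.

```python
def average_precision(is_true_positive, num_ground_truth):
    """Single-class AP; detections must be sorted by descending confidence."""
    tp = fp = 0
    precisions, recalls = [], []
    for hit in is_true_positive:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)

    # Interpolate: precision at each point becomes the best precision
    # achieved at that recall level or any higher one.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum the area under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Five detections, ranked by confidence; four real objects in the images.
hits = [True, False, True, True, False]
print(average_precision(hits, num_ground_truth=4))  # 0.625
```

In this toy case the model found three of the four objects and three of its five detections were correct, yet the AP is 0.625. The score summarizes the whole precision-recall trade-off, and computing it requires ground truth for the missed object, which is exactly what is unavailable when simply watching a deployed model.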

UX Strategies to Reduce Complexity

Given these challenges, effective CV UX design must introduce tools that assist and guide users. Matroid’s platform employs several strategies to streamline the process:

1. AI-Assisted Annotations

Leveraging existing models to assist with or replace annotation tasks can dramatically speed up workflows. Vision foundation models such as SAM/SAM2 or OWL have been trained to have a broad visual understanding of common objects, so they can be combined with minimal prompting to annotate objects quickly. For many use cases, this can make the annotation process much more intuitive. However, these foundation models may struggle with certain uncommon or irregular objects, such as cracks and defects.

Figure: Rapid annotation based on text prompts.

2. Progressive Disclosure for Complexity Management

Instead of overwhelming users with all options at once, progressively revealing advanced features as needed keeps the experience manageable.

For non-technical users, the detector creation process should be kept as simple as possible (select the type of annotation, annotate, and start training). Reasonable presets for the more advanced settings are essential so that these users can still create performant models.

For users who have more experience, additional settings and options can be exposed, such as the choice of model architecture and data augmentation pipelines.
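The preset-plus-override pattern described above can be sketched as a configuration object whose advanced fields carry defaults and are hidden unless the user opts in. This is an illustrative sketch only; the field names, preset values, and the `visible_settings` helper are assumptions, not Matroid's actual settings.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical detector settings; names and defaults are assumptions."""
    annotation_type: str                              # the one required choice
    architecture: str = "preset-default"              # advanced, preset
    augmentations: tuple = ("flip", "color_jitter")   # advanced, preset
    epochs: int = 50                                  # advanced, preset


BASIC_FIELDS = ("annotation_type",)


def visible_settings(config, advanced=False):
    """Return only the settings the current user should be shown."""
    settings = asdict(config)
    if advanced:
        return settings
    return {name: value for name, value in settings.items() if name in BASIC_FIELDS}


config = TrainingConfig(annotation_type="box")
print(visible_settings(config))  # {'annotation_type': 'box'}

# An experienced user reveals the advanced settings and overrides one.
tuned = replace(config, epochs=100)
print(visible_settings(tuned, advanced=True)["epochs"])  # 100
```

Because the presets live on the same object as the basic choice, both kinds of user train with a complete configuration. The interface changes only which fields are shown.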

3. Annotation Validation and Model Iteration

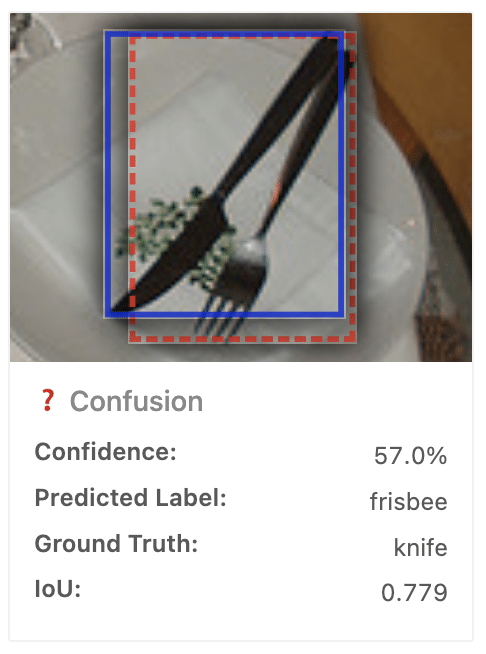

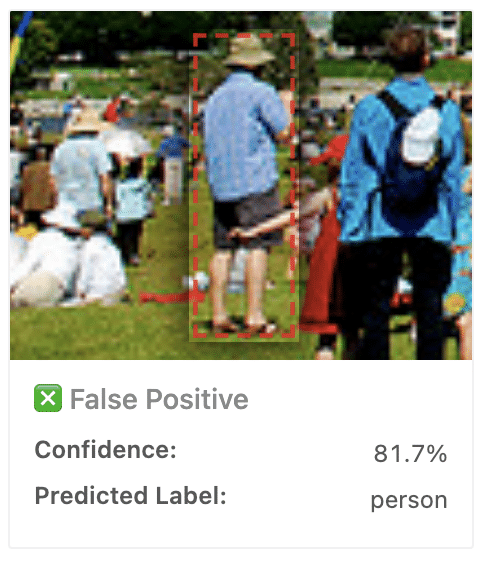

Evaluating model performance can be greatly simplified by incorporating visual interpretations of both model and annotation errors. A clear, intuitive interface allows users to quickly assess where discrepancies arise, making it easier to refine both model predictions and annotations. Additionally, an interactive confusion analysis tool enables users to explore areas where the model and annotations agree on an object’s presence but differ in classification.
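The data behind such a confusion analysis can be sketched by matching each annotation to the best-overlapping prediction and counting label pairs. The version below is a simplified, greedy sketch under stated assumptions: boxes are `(x1, y1, x2, y2)` tuples, a match requires an intersection-over-union (IoU) of at least 0.5, and the function names and sample data are hypothetical. It is not a description of Matroid's tool.

```python
from collections import Counter


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0


def confusion_counts(ground_truth, predictions, iou_threshold=0.5):
    """Count (annotated_label, predicted_label) pairs; None marks a missing side."""
    counts = Counter()
    unmatched = list(predictions)
    for gt_label, gt_box in ground_truth:
        best = max(unmatched, key=lambda p: iou(gt_box, p[1]), default=None)
        if best is not None and iou(gt_box, best[1]) >= iou_threshold:
            unmatched.remove(best)
            counts[(gt_label, best[0])] += 1   # agree on presence
        else:
            counts[(gt_label, None)] += 1      # annotated, not predicted
    for pred_label, _ in unmatched:
        counts[(None, pred_label)] += 1        # predicted, not annotated
    return counts


ground_truth = [("scratch", (10, 10, 50, 50)), ("dent", (100, 100, 140, 140))]
predictions = [("dent", (12, 12, 50, 50)), ("scratch", (300, 300, 320, 320))]

counts = confusion_counts(ground_truth, predictions)
print(counts[("scratch", "dent")])  # 1
```

Pairs with two different labels are the cases where model and annotations agree that something is there but disagree on what it is. Pairs with `None` on the annotation side are candidates for either a false detection or a missing annotation, which a reviewer has to decide visually.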

The feedback loop can be tightened by providing checkpoint mechanisms while annotating. By training a lightweight model on the currently annotated subset of the dataset, then letting users validate that the model improves as they add annotations, we can increase the frequency of feedback from once per fully annotated dataset to as often as needed.
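A checkpoint loop of this kind can be sketched as follows. The sketch assumes two caller-supplied functions, `train_fn` and `score_fn`, which are hypothetical placeholders for whatever lightweight training and evaluation a platform provides. The stand-ins used in the example are toy functions chosen only so the code runs; they do not represent real model behavior.

```python
def checkpoint_scores(annotated, holdout, train_fn, score_fn, step=100):
    """Train on growing prefixes of `annotated` and score each checkpoint."""
    history = []
    for n in range(step, len(annotated) + 1, step):
        model = train_fn(annotated[:n])
        history.append((n, score_fn(model, holdout)))
    return history


def still_improving(history, min_gain=0.01):
    """True if the latest checkpoint beat the previous one by at least min_gain."""
    if len(history) < 2:
        return True
    return history[-1][1] - history[-2][1] >= min_gain


# Toy stand-ins (assumptions): the "model" is just the number of examples
# seen, and the score rises with diminishing returns.
def toy_train(subset):
    return len(subset)


def toy_score(model, holdout):
    return model / (model + 100)


annotated = list(range(300))   # placeholder for 300 annotated images
holdout = []                   # placeholder validation set

history = checkpoint_scores(annotated, holdout, toy_train, toy_score)
print([n for n, _ in history])   # [100, 200, 300]
print(still_improving(history))  # True
```

Showing users the score after each checkpoint, along with a signal such as `still_improving`, lets them decide whether further annotation is likely to pay off before the whole dataset is labeled.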

Figure: Reviewing model performance: (left) example of a model prediction with an incorrect label, (right) example of a model prediction where there should be a ground truth annotation.

Conclusion: Simplifying the Complex with Matroid

Matroid’s no-code computer vision platform is designed to bridge the gap between power and usability. By integrating AI-assisted annotations, structured annotation workflows, and progressive feature disclosure, we empower users to create high-quality CV models with less friction.

Building an effective UX for computer vision isn’t just about making annotation easier; it’s about structuring the entire model development pipeline so that users can focus on high-value decisions rather than manual tasks. With these UX principles in mind, no-code computer vision platforms can make even the most complex CV workflows intuitive and efficient, empowering users of all backgrounds to build accurate models without deep technical expertise.

About the Author

Andrew Ellison is a Fullstack Software Engineer at Matroid with a background in aerospace composite materials. He is passionate about translating complex data into effective visualizations and moonlights as a ukulele player.
